Follow @PistonDeveloper at Twitter!

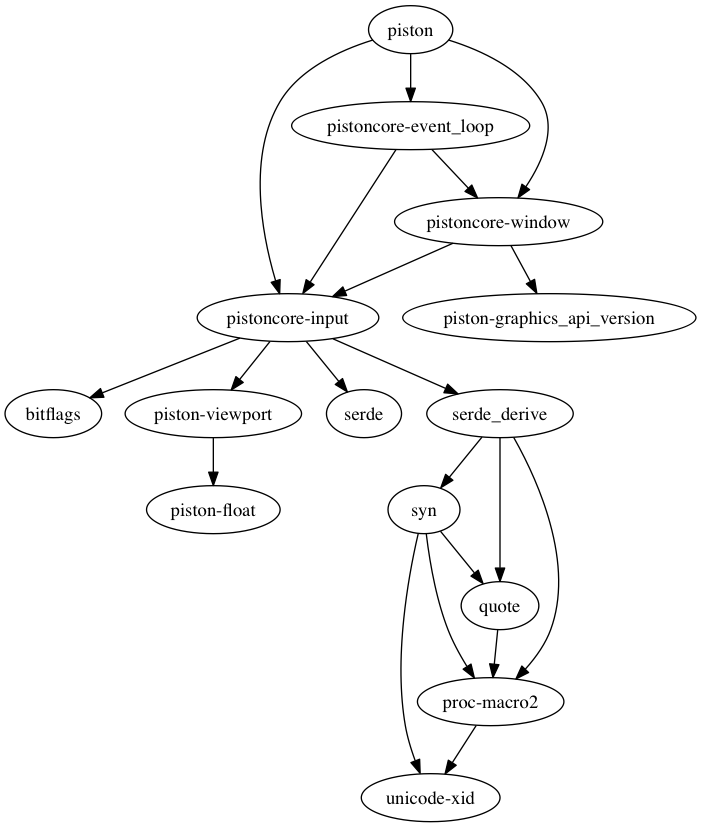

This is an announcement that the core library pistoncore-window has been stabilized. Version 1.0 is released!

The ecosystem has been upgraded (with help from Eco, as usual).

What this means to end-users and maintainers, is that the Piston ecosystem will be more stable from now on, with fewer version bumps.

Piston has been designed from the ground up to enable stability and modularity. Looking back, I am happy that the architecture has been delivering this promise very well:

- To an end-user, using Piston should be like writing idiomatic Rust code (trait for Window etc.)

- To a maintainer, using Piston should be independent on the OS platform or hardware

Special thanks to

These 12 people (including myself, bvssvni) are listed as authors of the Piston core:

- bvssvni

- Coeuvre

- gmorenz

- leonkunert

- mitchmindtree

- Christiandh

- Apointos

- ccgn

- reem

- TyOverby

- eddyb

- Phlosioneer

Stability and modularity

Most of the collaborative work in Rust Gamedev goes into standalone libraries which are shared among projects. Besides the 90% of code that goes into an average game, 10% is about stuff that one could call “game engine”. Out of this 10%, there is 9% which are independent libraries. Only the remaining 1% is a glue system for the ecosystem of libraries that orbit projects.

Piston is a glue system for game engines. It enables library maintainers to write code for targeting multiple game engines. Here, a “game engine” is defined as a set of libraries used to build a specific game.

The way people use Piston is that they can opt-out or work around almost everything. This is how it should feel like to end-users: It should be like writing Rust code. Rust has a standard library, but it is not necessary. In that way, Piston tries to follow idiomatic Rust.

Piston does not try to solve productivity problems with just one solution, because there is not one unique way to be productive. When I want to be productive, I write a Dyon api on top of a customized game engine. I created Dyon, so my love for it is biased, but I still use it on a daily basis. However, I also frequently develop customized domain-specific languages using Piston-Meta. Other people prefer other scripting languages or other techniques to stay productive. Most of these techiqnues do not require a lot of code.

Therefore, the primary objective of Piston is to provide stability and modularity.

Recommendations to new Rust gamedev developers

There is a saying “there are 50 game engines in Rust, but only 5 games”. This is not actually true, but it says something about a particular mindset. In reality, I have written over 50 small games, just for myself, but they are written in Dyon, not Rust. However, Dyon is written in Rust, so… it depends on how you count games.

The reason people say this is that most game engines in Rust are glue code designed to achieve productivity. Since people have different opinions and ways to think about productivity, you get many game engines. The people who make all these game engines, are probably the ones that do not use scripting for productivity. To people who like this kind of productivity style, I do not have particular recommendations, since there are so many good alternatives. Just pick one.

If you are making a game and you don’t have high requirements:

- Just pick a game engine that suits your style of productivity

- You can always switch game engine later

If you are making a game and have high requirements about features:

If you are making a game and require highly customized architecture for your game engine:

- Piston

Piston’s architecture helps you to modify the design and change it later.

If you want to ship a game and come from a C background:

- Just use SDL2, write the code and ship it (:P)

- Accelerate productivity by gradually importing libraries (e.g. use Piston’s SDL2 backend)

There are very few libraries in the Rust gamedev ecosystem that have reached stability. When you get into Rust, you take risks, so you have to trade these risks with other benefits.

One benefit is that in Rust there are usually fewer bugs per line of code compared to e.g. C++. This is because, not that C++ produces bugs, but that in C++ you have to ask for guidance from the compiler, while in Rust you get it by default.

Another benefit is that Rust concurrency is memory safe.

However, except from that, everybody knows that the choice is not about Rust vs C++, but which scripting language you will use to stay productive (:P).

Here are some: